If you have a large collection of music files (mp3, etc.) and want to easily manage them on desktop and mobile, you have many apps to choose from. After testing some of the highly rated ones, I’ve settled (for now) on what I think are the two best: MusicBee for desktop and Omnia for mobile. This article goes over how I use these two apps to manage almost 2000 songs.

MusicBee

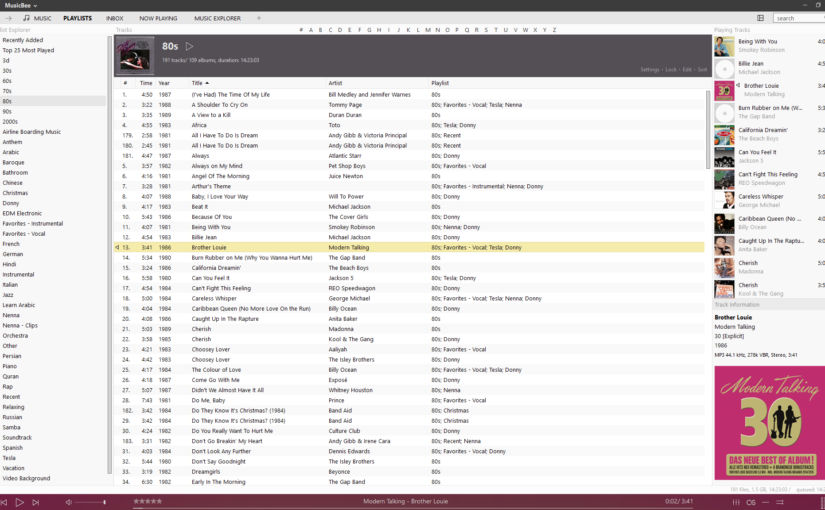

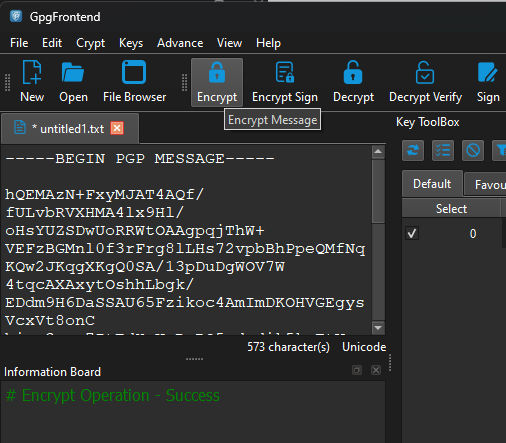

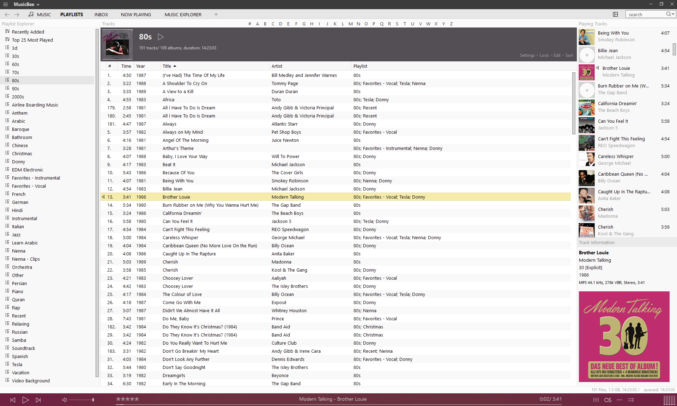

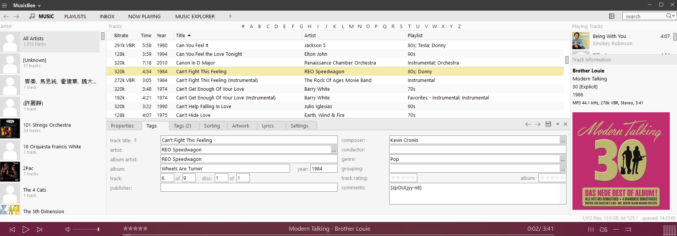

At this time, MusicBee is only available for Windows. Below is a screenshot of my MusicBee instance. The UI is customizable, which is great.

Header Bar

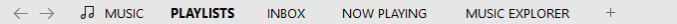

I customized the header bar to show just the tabs I care about, specifically:

- MUSIC (lists all music)

- PLAYLISTS (lists all playlists in the left panel and all music within a playlist in the middle panel)

- INBOX (I use this as a temporary staging location when I add new tracks to MusicBee)

- NOW PLAYING

- MUSIC EXPLORER (lets you browse by artist, showing albums for each artist)

To edit the header bar tabs:

- right-click on a tab and click “Close tab” to remove it

- click the + icon to add a new tab

“MUSIC” Tab

When I click the MUSIC tab in the header bar, I see this:

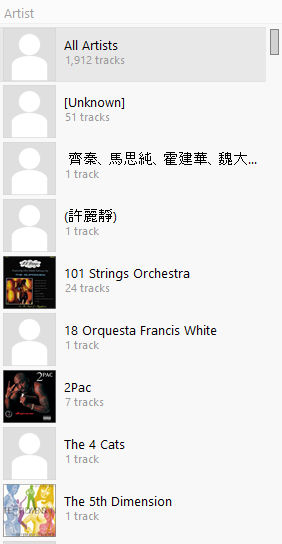

Left Sidebar

The left sidebar shows a list of all artists. The very first option is “All Artists”. I click “All Artists” to show a list of all my music files in the middle pane.

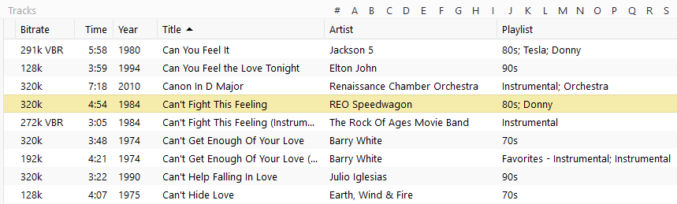

Middle Pane

The middle pane shows the filtered music tracks. I customized the columns to show just what I care about, specifically:

- Bitrate (I use this to check the encoding bitrate. If it is too low, I may replace the track with a higher-bitrate version.)

- Time (the song’s duration)

- Year (the year the song was released)

- Title (the title of the song)

- Artist (the name of the song’s artist)

- Playlist (a comma-delimited list of playlists a song is in)

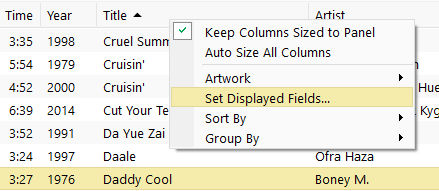

To change the columns, right-click on the header and click “Set Displayed Fields…”

Bottom Middle Panel

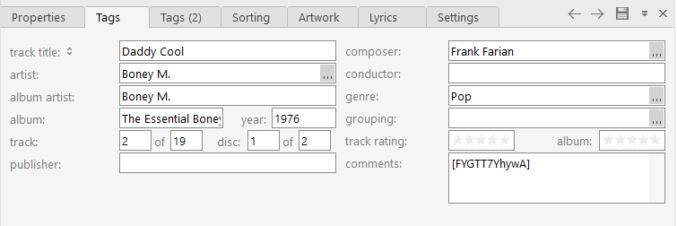

In the middle, below the track list, is an optional pane for editing a song’s properties. I normally enter the following metadata:

- Title

- Artist

- Year

- Comments (the YouTube video ID if I ripped the song from YouTube)

Bottom Right Panel

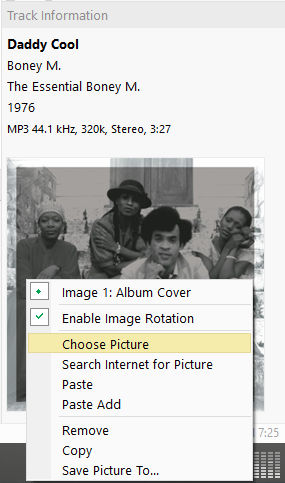

In the bottom right panel, you can see a song’s artwork. You can change the artwork by right-clicking and browsing to an image on your computer. The image should be square, e.g. 500 x 500 px.

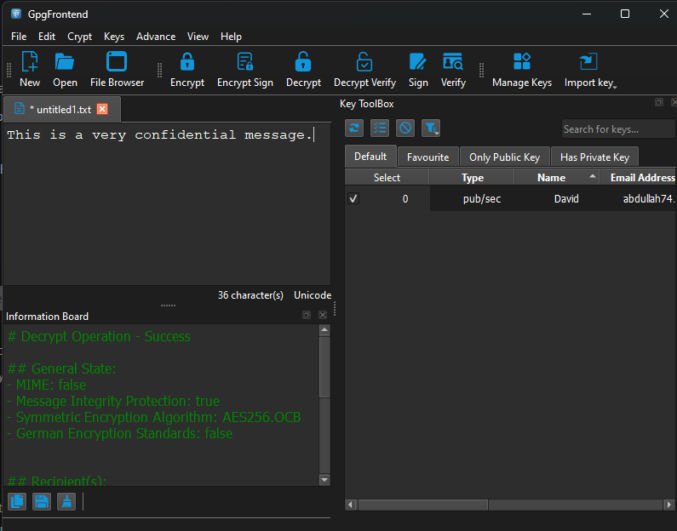

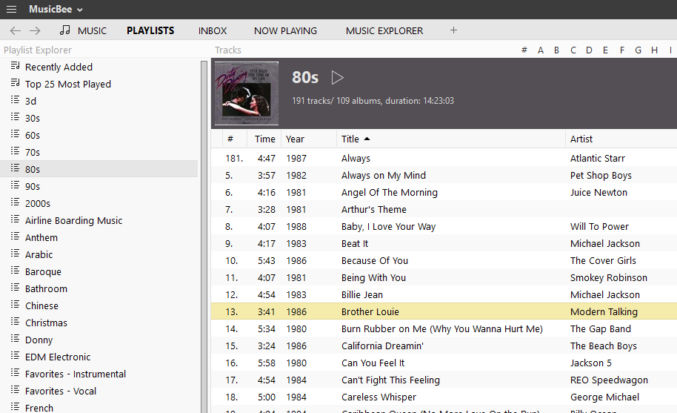

“PLAYLISTS” Tab

When I click the “PLAYLISTS” tab, I see the following:

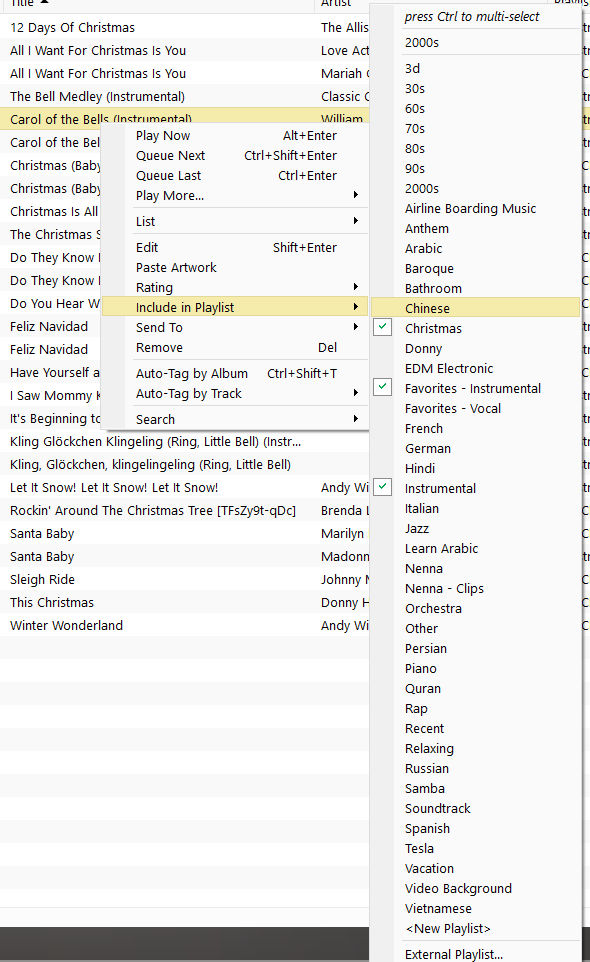

In the left sidebar, I see a list of playlists. In the middle panel, I see the list of tracks. To create a playlist, or to add a track to or remove it from a playlist, right-click on a track, click “Include in Playlist”, and either:

- click “<New Playlist>” at the bottom to create a new playlist

- click or ctrl+click one or more existing playlists to add the song to the playlist(s)

Playlist Format

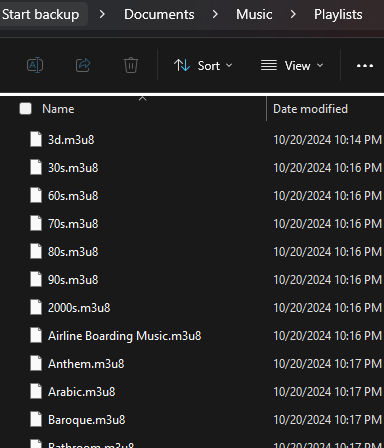

My music files are all in a single folder called “Music”.

Within that folder, I have a subfolder called “Playlists” containing all my playlist files.

I export my playlists in m3u8 format with relative paths. This allows me to copy my entire “Music” folder, including the “Playlists” subfolder, to another device, like my phone or tablet. The music player on the other device should then be able to read my playlists and the music files they reference without error.

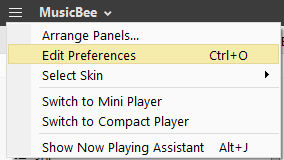

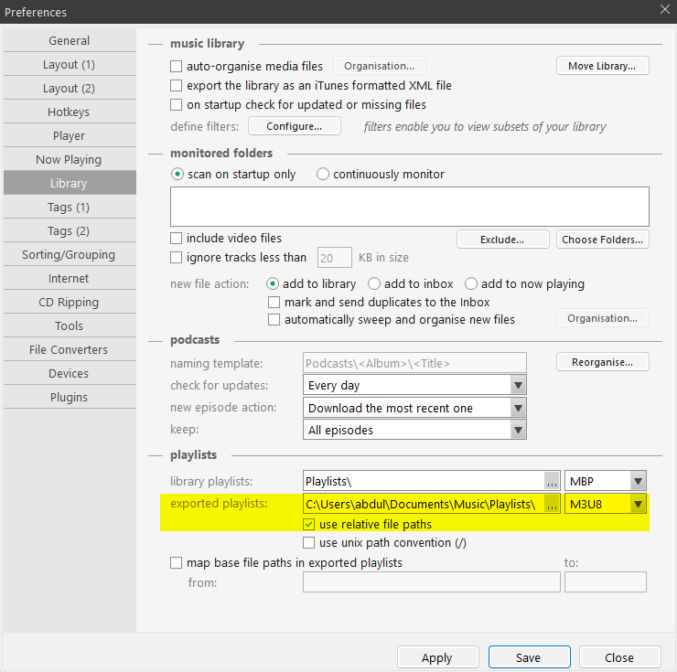

To set the playlist file format and path preference, click the hamburger menu in the top left corner, then click “Edit Preferences”.

Then, click “Library” in the left sidebar, select “M3U8”, and check the “use relative file paths” option as shown below.

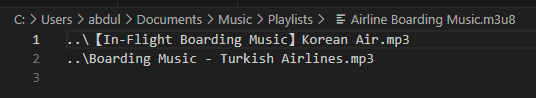

Now, if you export a playlist and open the m3u8 file in a text editor, you’ll see a relative path to each song in the playlist, like this:

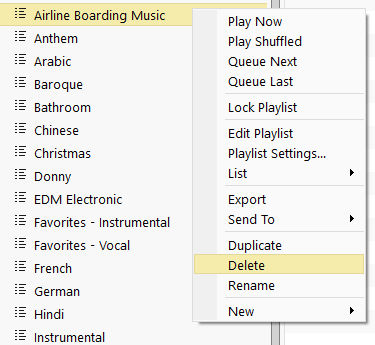
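If you want to confirm that every relative path in an exported playlist actually points at a file, a small script can do the check before you copy anything to another device. The following is a minimal sketch, not part of MusicBee: the function names are made up for illustration, and it assumes the playlist is UTF-8 text with one path per line, resolved relative to the folder that contains the playlist file.

```python
from pathlib import Path


def read_m3u8_entries(playlist_path):
    """Return the track paths listed in an M3U8 playlist, skipping comments."""
    # "utf-8-sig" also accepts files that start with a byte order mark.
    text = Path(playlist_path).read_text(encoding="utf-8-sig")
    entries = []
    for line in text.splitlines():
        line = line.strip()
        # Lines starting with '#' are directives such as #EXTM3U or #EXTINF.
        if line and not line.startswith("#"):
            entries.append(line)
    return entries


def find_missing_tracks(playlist_path):
    """Return the entries that do not resolve to a file next to the playlist."""
    playlist_dir = Path(playlist_path).parent
    missing = []
    for entry in read_m3u8_entries(playlist_path):
        # Playlists written on Windows may use backslashes; normalize them
        # so the same check also works on other operating systems.
        track = playlist_dir / Path(entry.replace("\\", "/"))
        if not track.is_file():
            missing.append(entry)
    return missing
```

Calling `find_missing_tracks` on a playlist file inside the “Playlists” subfolder returns an empty list when every referenced song is present, and otherwise returns the entries that could not be found.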

Rename, Delete and Export a Playlist

To rename or delete a playlist, click on the playlist in the left sidebar and click the corresponding option.

Omnia

Omnia is now my preferred app for mobile and tablet. It is currently only available on Android.

Omnia is pretty simple and intuitive to use except when you update playlists.

First-time use

When you use Omnia for the first time, tell Omnia where your music and playlist files are.

- Click Settings > Music Folders and specify a folder path. Omnia will scan the folder for all music files.

- Once the files have been scanned, click on the “Songs” tab in the main view to verify your songs are listed.

- Then, click on the “Playlists” tab to verify the playlists were loaded. If they weren’t, click on the 3 vertical dots in the top-right corner, click “Import”, click “SELECT ALL”, make sure all playlists are checked, and then click the “OK” button.

Subsequent use

If you’ve updated your music library and playlists, you’ll need to:

- click on the 3 vertical dots in the top-right corner, then click “Rescan Library” to pick up the updated files

- click the 3 vertical dots to the right of each playlist that has been updated, then click “Delete” to delete it

- click on the 3 vertical dots in the top-right corner, click “Import”, select one or all playlists, then click “OK” to load the updated playlists

Workflow

This is my workflow for acquiring music, adding it to MusicBee, and transferring it to Omnia on another device.

- Buy a song on Amazon Digital Music ($1 / song) or download a song as mp3 from YouTube using yt-dlp. I store the new files in a temporary “Music” folder.

- Edit the song in Audacity as necessary, e.g.

  - trim out start and end silence

  - normalize the volume

- Copy the music files to the folder containing all other music files.

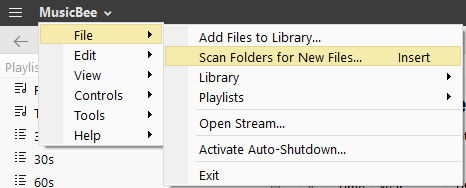

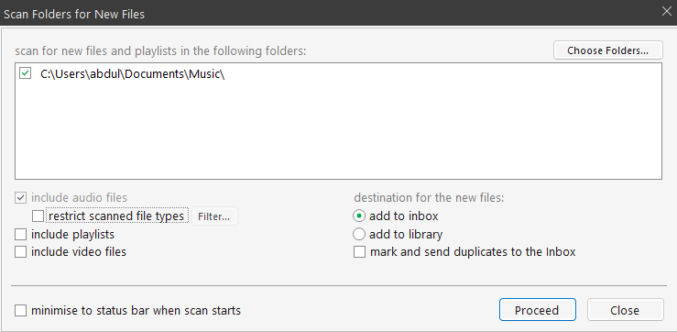

- Import the new music files into the “Inbox” in MusicBee by clicking “MusicBee” > File > “Scan Folders for New Files…”

I then specify the folder containing all my music, select “add to inbox”, which is a temporary staging area, and then click “Proceed”.

The new music will appear in the “INBOX” tab in MusicBee.

- Edit each song’s metadata (title, artist, year, etc) and add the song to existing playlists

- Move (send) the songs from the “INBOX” to the main “MUSIC LIBRARY”.

- Export any playlists that have been updated

- Connect my other device (phone / tablet) to my laptop

- Copy the music files from the temporary folder on my laptop to the external device

- Copy the updated playlist files from my laptop to the external device

- In Omnia on the external device, rescan the music library, then delete and reimport any updated playlists
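The two copy steps above can also be scripted. The following is a minimal sketch of one possible approach, not the exact manual process described here: instead of copying from the temporary folder, it compares the whole “Music” folder against the copy on the device and transfers only files that are missing or newer. The function name is hypothetical, and it assumes the device’s storage is reachable as a regular folder path, which is often not the case for phones connected over MTP.

```python
import shutil
from pathlib import Path


def sync_folder(source, destination):
    """Copy files that are missing or newer in source; return what was copied."""
    source, destination = Path(source), Path(destination)
    copied = []
    for src in sorted(source.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(source)
        dst = destination / rel
        # Copy when the file is absent on the device or older than the source.
        if not dst.exists() or src.stat().st_mtime > dst.stat().st_mtime:
            dst.parent.mkdir(parents=True, exist_ok=True)
            # copy2 keeps the modification time, so an unchanged file
            # is normally skipped on the next run.
            shutil.copy2(src, dst)
            copied.append(rel.as_posix())
    return copied
```

The returned list doubles as a checklist: any entry ending in `.m3u8` is a playlist that was updated and needs to be deleted and reimported in Omnia.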