Artificial Intelligence (AI) is about teaching computers to do smart things that normally require human intelligence — such as understanding language, recognizing faces, playing games, or creating art and music.

AI learns from lots of examples so it can notice patterns. For example, if you show AI thousands of pictures of cats and dogs, it will eventually know the difference between what a cat looks like and what a dog looks like. It doesn’t know what a cat or dog does; it just knows what a cat or dog looks like. Data is the fuel of AI. The more data and the cleaner the data, the better the AI is. AI improves by trial and error. Initially, AI will guess what a cat or dog looks like. If it makes a mistake and humans correct it, AI will learn and, eventually, not make the same mistake.
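The trial-and-error loop described above can be sketched in a few lines of Python. This is a toy illustration, not how production image models work: the two features (ear pointiness and snout length), their values, and the simple update rule are all assumptions made up for the example.

```python
# Toy "learn from corrections" sketch. Features and data are invented:
# each animal is described by (ear_pointiness, snout_length) on a 0-1 scale.
examples = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.7, 0.1), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
    ((0.4, 0.7), "dog"),
]

weights = [0.0, 0.0]
bias = 0.0

def guess(features):
    """Positive score means "cat", otherwise "dog"."""
    score = weights[0] * features[0] + weights[1] * features[1] + bias
    return "cat" if score > 0 else "dog"

for epoch in range(20):
    mistakes = 0
    for features, label in examples:
        if guess(features) != label:
            # The "human correction": nudge the weights toward the right answer.
            mistakes += 1
            direction = 1 if label == "cat" else -1
            weights[0] += direction * features[0]
            weights[1] += direction * features[1]
            bias += direction
    if mistakes == 0:
        break  # every training example is now classified correctly

print(guess((0.85, 0.25)))  # an unseen, cat-like example
print(guess((0.25, 0.85)))  # an unseen, dog-like example
```

The model starts out guessing wrong, gets corrected, and stops changing once it no longer makes mistakes on the examples it was shown.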

Huge Neural Networks

Modern AI uses neural networks, which are like a simplified brain. Large language models (LLMs) and diffusion models are two types of huge neural networks. These models are trained on billions of examples from the internet.

| Feature | LLM (Large Language Model) | Diffusion Model |
|---|---|---|
| Main goal | Generate or understand text | Generate or edit images/videos |
| Input type | Words / sentences | Text (as a prompt) + random noise |
| Output type | Text (e.g., paragraphs, code, chat) | Visuals (e.g., images, videos) |
| How it learns | Predicts the next word | Learns to reverse noise and create images |
| Examples | ChatGPT, Claude, Gemini | Midjourney, Stable Diffusion, DALL·E, Runway, Kling |

AI as a Classroom Analogy

Imagine a big school containing teachers (humans) and students (AIs).

The Teacher (Humans)

The teachers (humans) give the students (AIs) tons of examples: books, images, songs, videos, code — everything. The students don’t just memorize — they practice until they can do similar things on their own.

The LLM Student (AI)

One student, Lucy the LLM, loves reading and writing. She studies every book in the library and learns:

“After the words ‘Once upon a’, the next word is usually ‘time’.”

She becomes amazing at predicting the next word and can write essays, stories, or even hold a conversation — because she knows how words fit together.

Lucy = Large Language Model (writes and speaks intelligently).
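Lucy's next-word habit can be imitated with simple counting. The sketch below is a deliberately tiny stand-in for an LLM: it counts which word follows each three-word context in a made-up corpus. Real LLMs use neural networks rather than lookup tables; the corpus and function names here are hypothetical.

```python
from collections import Counter, defaultdict

# A made-up, three-sentence "library" for Lucy to read.
corpus = [
    "once upon a time there was a cat",
    "once upon a time there lived a king",
    "once upon a hill stood a castle",
]

def train(sentences, context_size=3):
    """Count which word follows each run of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(len(words) - context_size):
            context = tuple(words[i:i + context_size])
            counts[context][words[i + context_size]] += 1
    return counts

def predict_next(counts, text, context_size=3):
    """Return the most frequent follower of the last words, or None if unseen."""
    context = tuple(text.lower().split()[-context_size:])
    followers = counts.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

model = train(corpus)
print(predict_next(model, "Once upon a"))  # → time
```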

The Diffusion Student (AI)

Another student, Danny the Diffusion Model, loves art. His training exercise:

- The teacher shows him a picture.

- Then they cover it with random paint splatters.

- Danny learns to carefully “un-splatter” the image until it looks clear again.

After years of practice, Danny can now start with a blank canvas (just random dots) and, when you say “a cat wearing sunglasses,” he paints that from scratch.

Danny = Diffusion Model (paints images from words).
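Danny's splatter and un-splatter exercise can be sketched numerically. In this toy version the "picture" is five numbers, the noise level `beta` is an assumed value, and the true noise is reused in place of a trained network's prediction, so it only shows the arithmetic of one noising step and its reversal, not a working image generator.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

image = [0.0, 0.5, 1.0, 0.5, 0.0]  # a tiny 5-pixel "picture"
beta = 0.3  # share of noise mixed in (assumed value for illustration)

def splatter(pixels, beta):
    """Forward step: blend the picture with random Gaussian noise."""
    noise = [random.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(1 - beta) * p + math.sqrt(beta) * n
             for p, n in zip(pixels, noise)]
    return noisy, noise

def unsplatter(noisy, predicted_noise, beta):
    """Reverse step: subtract the predicted noise and rescale."""
    return [(x - math.sqrt(beta) * n) / math.sqrt(1 - beta)
            for x, n in zip(noisy, predicted_noise)]

noisy, true_noise = splatter(image, beta)

# A trained diffusion model would estimate the noise from `noisy` alone.
# This sketch reuses the true noise as a stand-in for a perfect prediction.
restored = unsplatter(noisy, true_noise, beta)

print([round(p, 3) for p in noisy])
print([round(p, 3) for p in restored])
```

Training a diffusion model amounts to teaching a network to make that noise prediction well; generation then repeats the reverse step many times, starting from pure noise.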

The Big Picture

Both Lucy and Danny are smart in different ways:

- Lucy talks and writes (text world 🌍).

- Danny paints and visualizes (image world 🎨).

They often work together — Lucy writes the idea, Danny draws it.

Types of AI

| Category | Description | Example |
|---|---|---|
| Narrow AI | Specialized, task-based AI | ChatGPT |
| Generative AI | Creates new content | DALL·E, Runway |
| Agentic AI | Acts independently to achieve goals | AutoGPT, Devin |
| Analytical AI | Analyzes large data | Fraud detection |
| Predictive AI | Forecasts outcomes | Stock or weather models |
| Conversational AI | Talks with humans | ChatGPT, Siri |
| Robotic AI | Moves and interacts physically | Drones, factory robots |

- Machine Learning teaches AI patterns.

- Generative AI teaches AI creativity.

- Agentic AI teaches AI action and autonomy.

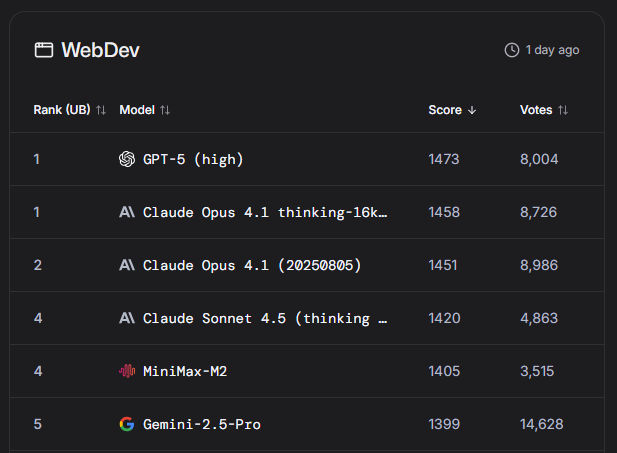

AI Leaderboard

There are many AI models from different creators, and some score higher than others. This leaderboard ranks AI models by an intelligence score.

Some models are better than others at specific tasks. The following leaderboards compare leading models by

Here’s the current top-5 leaderboard for web development as of Nov 11, 2025.

AI, CPUs, and GPUs

| Type | Analogy | Good For |
|---|---|---|
| CPU | A brilliant chef cooking one perfect dish at a time | Complex logic, single-thread tasks |
| GPU | A kitchen with 1,000 chefs making the same dish simultaneously | Massive parallel work (like AI math) |

Originally, GPUs were built for graphics — drawing images and 3D scenes in video games.

- CPUs (central processing units) are like smart workers — great at doing one task at a time, but carefully.

- GPUs are like armies of workers — they can do thousands of small calculations at once.

For highly parallel work such as AI math, what would take a CPU weeks to do, a GPU can do in hours.
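The reason AI math suits "many chefs" is that it breaks into lots of small, independent calculations. The sketch below multiplies two tiny matrices, first one cell at a time and then by handing every cell to a pool of workers. It only illustrates the structure of the work: Python threads will not make this faster, and a real GPU runs thousands of such calculations in hardware. The matrices are made-up values.

```python
from concurrent.futures import ThreadPoolExecutor

A = [[1, 2], [3, 4], [5, 6]]   # 3x2 matrix
B = [[7, 8, 9], [10, 11, 12]]  # 2x3 matrix

def cell(position):
    """Compute one cell of A x B; it needs no result from any other cell."""
    row, col = position
    return sum(A[row][k] * B[k][col] for k in range(len(B)))

positions = [(r, c) for r in range(len(A)) for c in range(len(B[0]))]

# "One chef": compute the cells one after another.
sequential = [cell(p) for p in positions]

# "Many chefs": hand every cell to a pool of workers.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(cell, positions))

print(sequential)
print(sequential == parallel)  # → True
```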

AI depends heavily on GPUs.

Nvidia, the company that makes most AI GPUs (like the H100 and A100), has become one of the most valuable companies in the world because of AI demand.

Data centers around the world are being built specifically to host GPU farms — giant rooms filled with thousands of GPUs that power AI models.

Cloud providers (like AWS, RunPod, or Google Cloud) rent out GPU power so smaller developers can build and test AI apps without owning hardware.

AI Training vs. Inference

There are two main stages of AI:

1. Training (Learning)

This is when the AI learns from data. For example, teaching an AI to recognize cats by showing it millions of cat photos. It requires huge amounts of computation and it needs massive GPU clusters — sometimes thousands of GPUs working together for weeks or months.

Think of it like “going to school.”

2. Inference (Using What It Learned)

Once trained, the AI can use what it knows: answering questions, generating images, and so on. It still uses GPUs, but typically far fewer for each request, since it is now applying what it learned rather than learning it.

Think of it like “taking an exam” — it’s using what it learned efficiently.
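The difference in cost between the two stages shows up even in a one-number model. In this minimal sketch, training loops over the data a thousand times to find a single weight, while inference is one multiplication. The data (hours studied versus exam score), the learning rate, and the step count are assumptions chosen for illustration.

```python
# Hypothetical data: hours studied -> exam score, following score = 10 * hours.
data = [(1.0, 10.0), (2.0, 20.0), (3.0, 30.0), (4.0, 40.0)]

def train(data, steps=1000, learning_rate=0.01):
    """Training: repeatedly adjust the weight to shrink the prediction error."""
    weight = 0.0
    for _ in range(steps):
        # Gradient of the mean squared error with respect to the weight.
        gradient = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= learning_rate * gradient
    return weight

def infer(weight, x):
    """Inference: a single cheap calculation with the learned weight."""
    return weight * x

weight = train(data)                 # expensive: 1,000 passes over the data
print(round(infer(weight, 5.0), 2))  # cheap: one multiplication
```

Real models have billions of weights instead of one, which is why training needs GPU clusters while a single answer needs far less.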

AI Hallucinations

An AI hallucination is when an AI makes up something false but presents it as true.

It happens because AIs don’t know truth — they just predict what sounds right based on pattern-matching. For example,

| AI Type | What “Hallucination” Looks Like |
|---|---|
| LLM (ChatGPT, Claude) | Makes up fake facts, quotes, sources, or people |
| Image Model (Midjourney, DALL·E) | Adds random visual details that weren’t in the prompt (like extra fingers 👋 or distorted objects) |
| Video / Audio Models | Create unrealistic motion, or mis-sync voices and faces |
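A toy pattern-matcher makes the cause of text hallucinations easy to see. The sketch below is a hypothetical model that has only learned which word most often follows "is" in four made-up sentences. Asked about a country it never saw, it still answers fluently and confidently, because it has no notion of truth. Real LLMs are far more capable, but the failure has the same shape.

```python
from collections import Counter

# Made-up training data. Every sentence ends with "... is <answer>".
training_sentences = [
    "the capital of france is paris",
    "the capital of italy is rome",
    "the capital of spain is madrid",
    "the largest city of france is paris",
]

# The model's entire "knowledge": how often each word came right after "is"
# (in this data, that is always the last word of the sentence).
after_is = Counter(sentence.split()[-1] for sentence in training_sentences)

def complete(prompt):
    """Finish the prompt with the most frequent continuation seen in training."""
    most_likely_word = after_is.most_common(1)[0][0]
    return f"{prompt} {most_likely_word}"

print(complete("the capital of japan is"))  # → the capital of japan is paris
```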

Hugging Face

Hugging Face is basically the GitHub of Artificial Intelligence. It’s a giant online platform where people share, explore, and use AI models, datasets, and tools — all in one place. At Hugging Face, you’ll find

| Section | What It Offers | Example |
|---|---|---|
| Models | Pre-trained AI models (text, image, audio, etc.) | ChatGPT-like LLMs, Stable Diffusion, Whisper |
| Datasets | Large collections of data used to train AIs | Wikipedia text, image caption sets, code samples |
| Spaces | Interactive apps people build with AI | You can test image generators, chatbots, translators |
| Transformers Library | Hugging Face’s open-source code that makes it easy to use models | Used by researchers, developers, and hobbyists everywhere |

Imagine you want to build an app that turns spoken words into text, translates it to French, and then summarizes it. On Hugging Face, you can:

- Search for a speech-to-text model (like OpenAI’s Whisper).

- Add a translation model (like Helsinki-NLP English-to-French).

- Plug in a summarizer (like BART or T5).

- Run it all with just a few lines of Python code using the transformers library.

You don’t have to train anything from scratch — it’s all there, ready to go.
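The steps above might be wired together roughly as follows. Treat this as a sketch under assumptions: the model IDs (`openai/whisper-small`, `Helsinki-NLP/opus-mt-en-fr`, `facebook/bart-large-cnn`) are illustrative picks, the pipeline task names may vary with the installed transformers version, and transcribing an audio file usually also requires ffmpeg. Note that `facebook/bart-large-cnn` was trained on English news, so for French text a multilingual summarizer, or summarizing before translating, would likely work better.

```python
def run_chain(audio_path, transcribe, translate, summarize):
    """Apply the three stages in the order described above."""
    english_text = transcribe(audio_path)
    french_text = translate(english_text)
    return summarize(french_text)


def build_stages():
    """Wrap three Hugging Face pipelines as plain text-in, text-out functions.

    Needs `pip install transformers` plus a backend such as PyTorch, and
    downloads each model on first use. The model IDs are assumptions.
    """
    from transformers import pipeline

    asr = pipeline("automatic-speech-recognition", model="openai/whisper-small")
    en_to_fr = pipeline("translation", model="Helsinki-NLP/opus-mt-en-fr")
    summarizer = pipeline("summarization", model="facebook/bart-large-cnn")

    return (
        lambda path: asr(path)["text"],
        lambda text: en_to_fr(text)[0]["translation_text"],
        lambda text: summarizer(text)[0]["summary_text"],
    )


# Usage (downloads several models; "meeting.wav" is a hypothetical file):
# transcribe, translate, summarize = build_stages()
# print(run_chain("meeting.wav", transcribe, translate, summarize))
```

Keeping the chaining logic separate from the model loading means each stage can be swapped for a different model without touching the rest.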

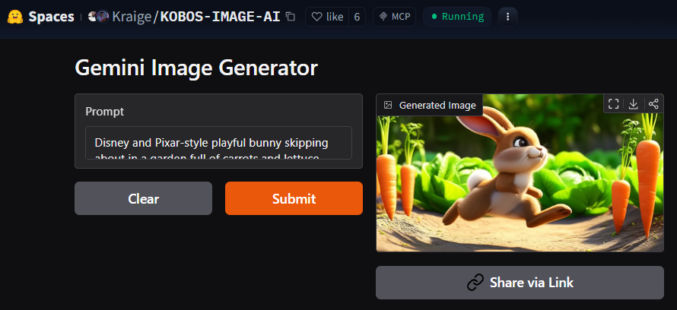

Hugging Face Spaces is like GitHub Pages – it’s where developers can host their AI models and provide a simple UI using Gradio, a simple web interface to demo an AI app. For example, you can search for “text to image”, click on a result, like Gemini Image Generator, and test the model in a browser.

You can also use models in your own code using Python. For example,

- Install the transformers library:

pip install transformers

- Load a model (for example, a text generator):

from transformers import pipeline

generator = pipeline("text-generation", model="gpt2")

result = generator("Once upon a time, there was a cat", max_length=30)

print(result[0]["generated_text"])

ComfyUI

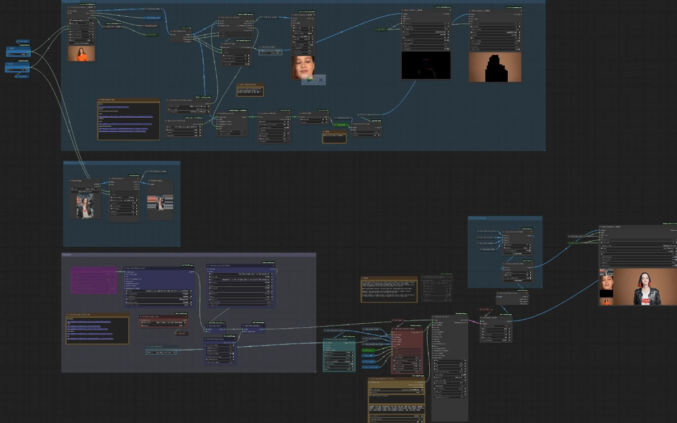

ComfyUI is a visual, node-based interface for creating AI images and videos. It’s a drag-and-drop app that lets you build your own AI image or video generator. Instead of typing long code, you build a “workflow” by connecting blocks called nodes. Each node does one thing:

- One node loads your model

- One node reads your text prompt

- One node generates an image

- Another node might upscale, add depth, or edit colors
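The idea of a node workflow can be sketched in plain Python. This is not ComfyUI's actual file format or API: the node names, the dictionary layout, and the string-returning stand-in functions are all hypothetical, chosen only to show how connected nodes pass results to one another.

```python
# Stand-in node functions: they return strings instead of running real models.
def load_model():
    return "toy-model"

def read_prompt():
    return "a cat wearing sunglasses"

def generate(model, prompt):
    return f"image({prompt}) by {model}"

def upscale(image):
    return f"upscaled[{image}]"

# Each node names the function it runs and the nodes whose output it consumes.
workflow = {
    "model": (load_model, []),
    "prompt": (read_prompt, []),
    "image": (generate, ["model", "prompt"]),
    "final": (upscale, ["image"]),
}

def run(workflow, target, cache=None):
    """Evaluate `target` after recursively evaluating the nodes it depends on."""
    cache = {} if cache is None else cache
    if target not in cache:
        func, inputs = workflow[target]
        cache[target] = func(*(run(workflow, name, cache) for name in inputs))
    return cache[target]

print(run(workflow, "final"))
# → upscaled[image(a cat wearing sunglasses) by toy-model]
```

Rewiring the workflow, for example removing the upscale node, only means editing the dictionary, which mirrors dragging connections in a visual editor.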

You can run ComfyUI locally, but you need plenty of disk space and a powerful computer, preferably with a GPU, and such computers are expensive. Alternatively, you can run ComfyUI in a browser through services such as ComfyCloud, RunComfy, or ThinkDiffusion, where you rent powerful GPUs and pay only for the time you use.

Image and Video AI Playground

You can test some of the leading image and video AI models at RunComfy’s playground.