Usually, people create AI videos by describing what they want in a text prompt. Depending on the result you are looking for, this can be very difficult. Another way to create an AI video is to provide a “driving performance” video that shows the movements you want the AI to mimic. For example, if you want to make a video of yourself dancing and lip-syncing exactly like someone in an existing video, you can upload that video as the “driving performance” video and upload an image of yourself as the “character image”. This post explains how to do this with Runway Act-Two.

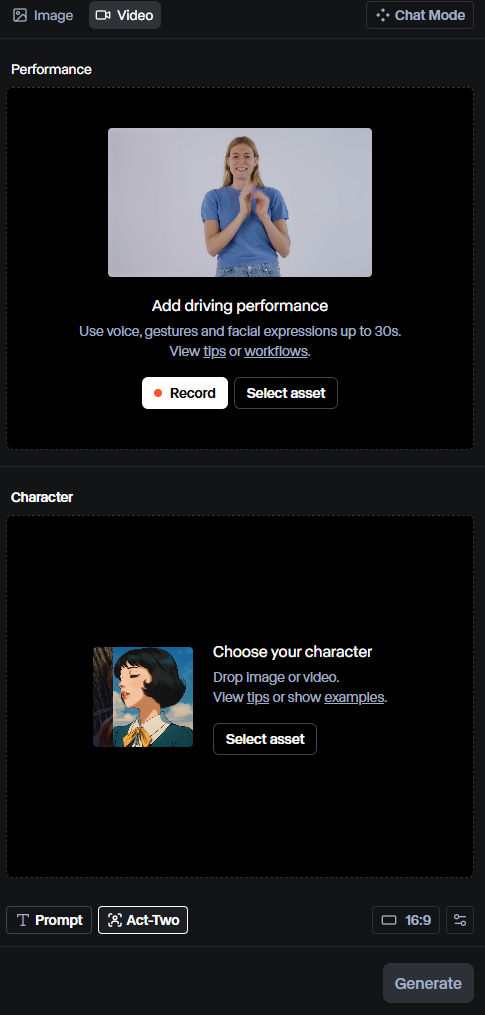

In Runway, click on Act-Two. You will see the UI below.

In the top section, you upload a “driving performance” video. It contains the body movements and facial expressions you want to copy onto your character in the bottom section.

In the bottom section, your character can come from an image or video that you upload. For simplicity, and to match the driving video, I will upload an image containing my character.

For demonstration purposes, I want to make myself sing and dance exactly like the subject in the following video.

Runway Act-Two provides the following recommendations for driving performance videos.

- Feature a single subject in the video

- Ensure the subject’s face remains visible throughout the video

- Frame the subject, at furthest, from the waist up

- Well-lit, with defined facial features and expressions

- Certain expressions, such as sticking out a tongue, are not supported

- No cuts that interrupt the shot

- Ensure the performance follows our Trust & Safety standards

- [Gestures] Ensure that the subject’s hands are in-frame at the start of the video

- [Gestures] Start in a similar pose to your character input for the best results

- [Gestures] Opt for natural movement rather than excessive or abrupt movement

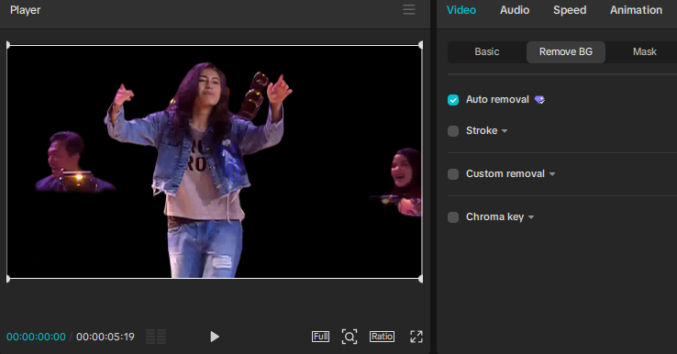

Since my “driving performance” video has people playing music in the background, I need to remove them so that the video features a single subject. One way to do this is with CapCut’s Auto Background Removal feature.

While it’s not perfect, it may be sufficient for Runway’s Act-Two. Here are two other AI-based video background removal tools that seem to do a better job.

VideoBgRemover.com seems to produce the best results. However, for this test, I used the imperfect results from CapCut.

If you need more accurate subject isolation or background removal, use the Roto Brush tool in Adobe After Effects.

DRIVING PERFORMANCE VIDEO

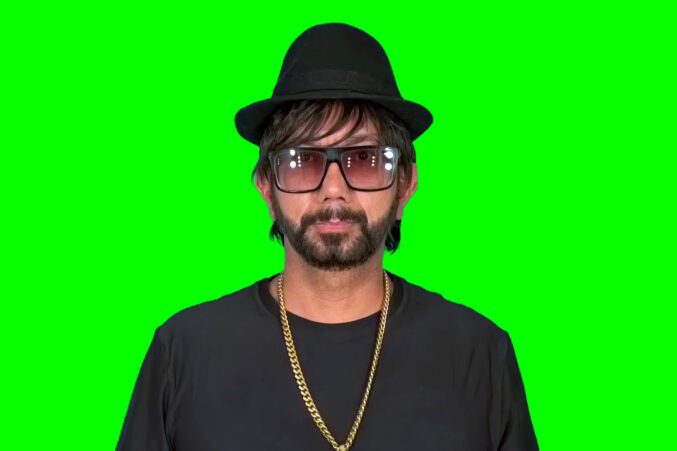

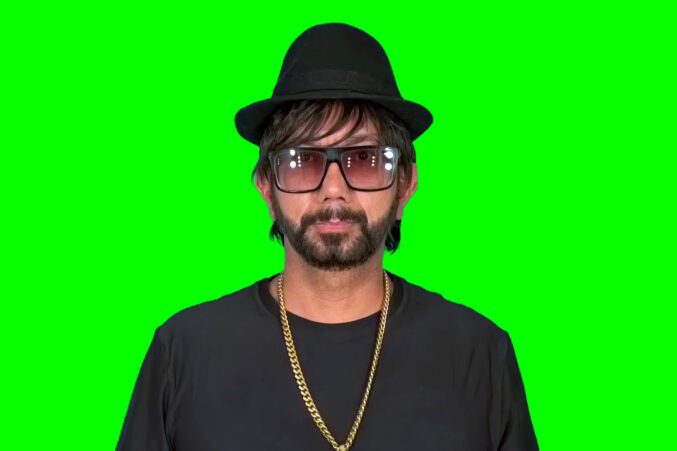

CHARACTER IMAGE

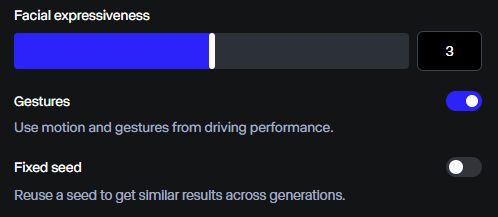

Runway Act-Two provides the following recommendations for character images.

- Feature a single subject

- Frame the subject, at furthest, from the waist up

- Subject has defined facial features (such as mouth and eyes)

- Ensure the image follows our Trust & Safety standards

- [Gestures] Ensure that the subject’s hands are in-frame at the start of the video

For the character image settings, make sure “Gestures” is toggled on.

OUTPUT

To show just how closely the generated video matches the source, I overlaid it on the source video.

That looks pretty impressive to me.
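The post doesn't say exactly how the overlay was made; a video editor's opacity slider is the usual way. Assuming a simple fixed-opacity blend, each output frame is a weighted average of the two inputs, out = (1 − opacity) · source + opacity · generated. The sketch below shows that per-frame math with NumPy. `overlay_frames` is a hypothetical helper, and it assumes both frames are already decoded into same-sized 8-bit RGB arrays.

```python
import numpy as np


def overlay_frames(source: np.ndarray, generated: np.ndarray,
                   opacity: float = 0.5) -> np.ndarray:
    """Hypothetical helper: blend `generated` over `source` at `opacity` (0 to 1).

    Assumes both frames are uint8 arrays of shape (height, width, channels).
    """
    if source.shape != generated.shape:
        raise ValueError("frames must have the same height, width and channels")
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must be between 0 and 1")
    # Blend in floating point so uint8 values cannot overflow or wrap around.
    blended = ((1.0 - opacity) * source.astype(np.float64)
               + opacity * generated.astype(np.float64))
    # Round and clamp back to valid 8-bit pixel values.
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)
```

At an opacity of 0.5, wherever the generated character lines up with the original performer, the two images reinforce each other. Where they drift apart, you see a ghosted double image, which makes mismatches easy to spot.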

Here are more examples.

INPUT – Performance Video

INPUT – Character Image

OUTPUT

OUTPUT – Overlaid

INPUT – Performance Video

INPUT – Character Image

OUTPUT

OUTPUT – Overlaid

INPUT – Performance Video

ERROR

INPUT – Performance Video

ERROR

INPUT – Performance Video

ERROR

INPUT – Performance Video

Since the subject in the previous video was too small, Runway Act-Two could not detect the face, so I scaled the video up in CapCut. This time, Runway Act-Two was able to detect the face.
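Scaling a video up like this is equivalent to cropping a smaller region of each frame and resizing it back to the original dimensions. If you prefer a command-line tool to CapCut, the sketch below computes that crop for a given zoom factor and builds a filter string using ffmpeg’s standard `crop` and `scale` filters. `zoom_crop`, `CropBox`, and `ffmpeg_filter` are hypothetical names for illustration; they are not part of Runway or CapCut. The zoom point defaults to the frame center, which assumes the subject is roughly centered.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CropBox:
    """Hypothetical crop rectangle in pixels: top-left corner plus size."""
    x: int
    y: int
    w: int
    h: int


def zoom_crop(frame_w: int, frame_h: int, zoom: float,
              center_x: Optional[float] = None,
              center_y: Optional[float] = None) -> CropBox:
    """Return the region that, scaled back to full size, enlarges the
    subject by `zoom` (e.g. 2.0 makes the subject twice as large)."""
    if zoom < 1.0:
        raise ValueError("zoom must be >= 1.0 to scale the subject up")
    # Assumption: zoom on the frame center unless a point is given.
    cx = frame_w / 2 if center_x is None else center_x
    cy = frame_h / 2 if center_y is None else center_y
    # Keep the frame's aspect ratio, but make the crop `zoom` times smaller.
    w = round(frame_w / zoom)
    h = round(frame_h / zoom)
    # Center the crop on the zoom point, then clamp it inside the frame.
    x = min(max(round(cx - w / 2), 0), frame_w - w)
    y = min(max(round(cy - h / 2), 0), frame_h - h)
    return CropBox(x, y, w, h)


def ffmpeg_filter(box: CropBox, frame_w: int, frame_h: int) -> str:
    """Build an ffmpeg -vf string that crops `box` and scales it back up."""
    return f"crop={box.w}:{box.h}:{box.x}:{box.y},scale={frame_w}:{frame_h}"
```

For example, zooming a 1920×1080 video by 2.0 on its center gives `crop=960:540:480:270,scale=1920:1080`, which you could apply with something like `ffmpeg -i performance.mp4 -vf "crop=960:540:480:270,scale=1920:1080" performance_zoomed.mp4` (the file names are placeholders). Clamping the crop keeps it inside the frame when the zoom point is near an edge. The same approach could crop an unwanted foreground object, such as the railing in the next example, out of the frame.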

INPUT – Character Image

OUTPUT

OUTPUT – Overlaid

Since I wasn’t able to place myself behind the metal railing, I scaled up the source video to crop the railing out of the frame.

INPUT – Performance Video

ERROR

INPUT – Performance Video

INPUT – Character Image

OUTPUT

OUTPUT – Overlaid

INPUT

I used VideoBgRemover.com to remove the background.

OUTPUT

OUTPUT – Overlaid

INPUT – Performance Video

INPUT – Character Image

OUTPUT

OUTPUT – Overlaid